我愛你,你是自由的。

Gradient Boosted Regression Trees (GBRT,名稱就不用翻譯了吧,後面直接用簡稱)或Gradient Boosting, 是一種用於分類和回歸靈活的非指數統計學習方法。

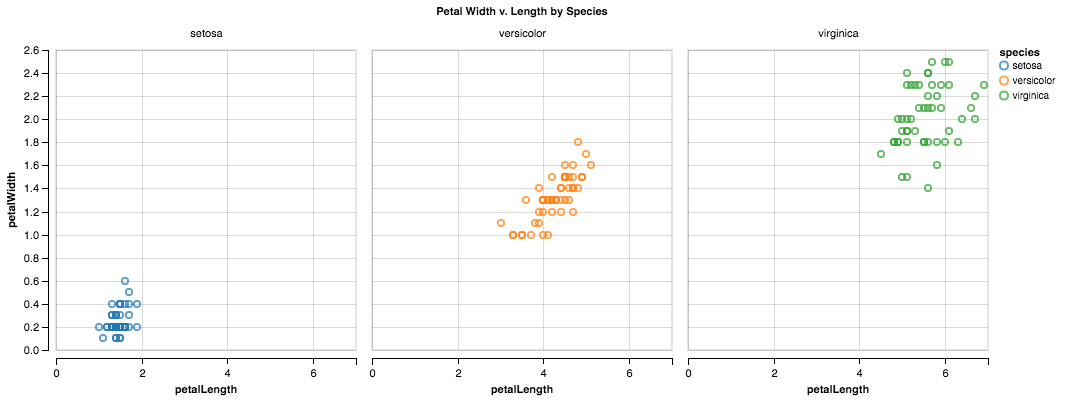

I recently came upon Brian Granger and Jake VanderPlas’s Altair, a promising young visualization library. Altair seems well-suited to addressing Python’s ggplot envy, and its tie-in with JavaScript’s Vega-Lite grammar means that as the latter develops new functionality (e.g., tooltips and zooming), Altair benefits — seemingly for free!

Indeed, I was so impressed by Altair that the original thesis of my post was going to be: “Yo, use Altair.”

In this post we take a tour of the most popular machine learning algorithms. It is useful to tour the main algorithms in the field to get a feeling of what methods are available.

There are so many algorithms available and it can feel overwhelming when algorithm names are thrown around and you are expected to just know what they are and where they fit.

數據類別分布不平衡一直是數據挖掘鄰域的一大問題,當人們所關注的類別的樣本遠少於其他類別的樣本時,就會產生這個問題。數據類別不平衡問題在現實生活中經常出現,已引起研究者的廣泛注意。在本文中,我們首先介紹在數據分布不平衡場景下,分類的一些特性,簡單的回顧了數據分布不平衡在機器學習及現實應用中所造成的一些問題。介紹了用來衡量不平衡數據分類性能的特定指標,並列舉了一些已提出的解決方法。特別地,我們將介紹:數據預處理,代價敏感學習和集成技術三種主要方法,並做實驗,在類內及類間,對這三種方法做了比較。

機器學習算法太多了,分類、回歸、聚類、推薦、圖像識別領域等等,要想找到一個合適算法真的不容易,所以在實際應用中,我們一般都是采用啟發式學習方式來實驗。通常最開始我們都會選擇大家普遍認同的算法,諸如SVM,GBDT,Adaboost,現在深度學習很火熱,神經網絡也是一個不錯的選擇。假如你在乎精度(accuracy)的話,最好的方法就是通過交叉驗證(cross-validation)對各個算法一個個地進行測試,進行比較,然後調整參數確保每個算法達到最優解,最後選擇最好的一個。但是如果你只是在尋找一個“足夠好”的算法來解決你的問題,或者這裡有些技巧可以參考,下面來分析下各個算法的優缺點,基於算法的優缺點,更易於我們去選擇它。

Google’s self-driving cars and robots get a lot of press, but the company’s real future is in machine learning, the technology that enables computers to get smarter and more personal.

– Eric Schmidt (Google Chairman)

We are probably living in the most defining period of human history. The period when computing moved from large mainframes to PCs to cloud. But what makes it defining is not what has happened, but what is coming our way in years to come.

提升一個模型的表現有時很困難。如果你們曾經糾結於相似的問題,那我相信你們中很多人會同意我的看法。你會嘗試所有曾學習過的策略和算法,但模型正確率並沒有改善。你會覺得無助和困頓,這是90%的數據科學家開始放棄的時候。

I Come, I Live, I Experience.